Tucked inside the Communications Decency Act (CDA) of 1996 is one of the most valuable tools for protecting freedom of expression and innovation on the Internet: Section 230. This comes somewhat as a surprise, since the original purpose of the legislation was to restrict free speech on the Internet. The Internet community as a whole objected strongly to the Communications Decency Act, and with EFF’s help, the anti-free speech provisions were struck down by the Supreme Court. But thankfully, CDA 230 remains and in the years since has far outshone the rest of the law.

Source: Section 230 of the Communications Decency Act | Electronic Frontier Foundation

I just read a TechDirt article condemning CBS’ 60 Minutes for disinformation regarding Section 230, which led me to the EFF’s page and infographic.

I respect the EFF immensely, but I remain unconvinced.

The EFF claims that if we didn’t have Section 230, places like Reddit, Facebook, and Twitter would effectively be sued out of existence. Or, even if they don’t get sued out of existence, they’ll have to hire an army of people to police the content on their site, the costs of which will drive them out of existence, or which they will pass on to users.

I don’t see what’s so valuable about Reddit, Facebook, or Twitter that these places should be protected like a national treasure. All three are proof positive that allowing every person to virtually open their window and shout their opinions into the virtual street is worth exactly what everyone is paying for the privilege: nothing. It’s just a lot of noise, invective, and ad hominem. And if that were the extent of the societal damage, that would be enough. But all of this noise has fundamentally changed how news organizations like 60 Minutes work. Proper journalism is all but gone. In order to compete, it’s ALL just noise now.

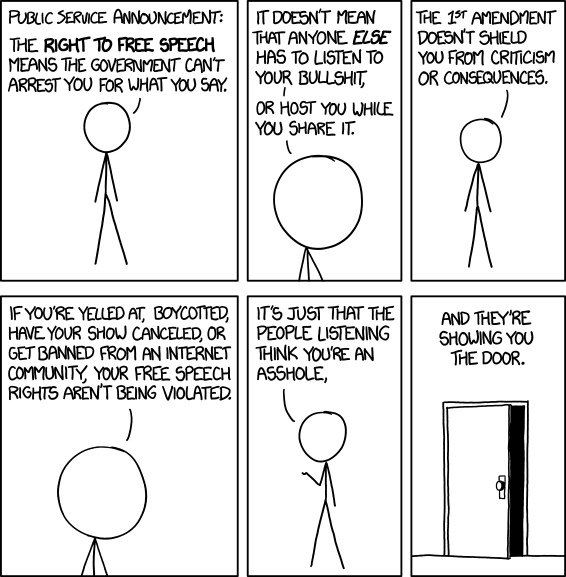

The EFF compares a repeal of Section 230 to government-protecting laws in Thailand or Turkey, but this is every bit as much disinformation as TechDirt claims 60 Minutes is promulgating. Repealing Section 230 would not repeal the First Amendment. People in this country could still say whatever they wanted to about the government, or anything else. Repealing 230 would just hold them personally accountable for it. And I struggle to understand how anyone, given 20 years of ubiquitous internet access and free platforms, can conclude that anonymity and places to scrawl what is effectively digital graffiti have led to some sort of new social utopia. The fabric of society has never been more threadbare, and people shouting at each other, pushing disinformation, and mistreating others online 24×7 is continuing to make the situation worse.

Platforms are being used against us by a variety of bad actors. The companies themselves are using our information against us to manipulate at least our buying behavior, and selling our activity to anyone who wants to buy it. There was some amount of alarm raised when it was discovered that AT&T tapped the overseas fiber optic cables for the NSA, in gross and blatant violation of the Fourth Amendment, but once discovered, Congress just passed a law to make it legal, retroactively. Now the NSA and FBI don’t need to track us any more. Literally every company in America which has a web site is helping to collate literally everything we do into a dossier that gets amalgamated and traded by 3rd-party information brokers. Our cell companies and ISPs merge location tracking into the mix, and the government picks this information up for pennies on the dollar compared to what it would cost them to collect it themselves.

I don’t like this situation. I think it should stop. I think anything that would put a dent in Facebook, Twitter, and Reddit being able to collate and track everything anyone does on the internet, and sell it to anyone with a checkbook, needs to go away. If repealing Section 230 forces these companies out of business, I say, “Good.” They want to tell me that the costs to deal with content moderation in a Section 230-less world would put them out of business. I call BS.

If Facebook and YouTube can implement real-time scanning of all video being uploaded to their sites, and block or de-monetize anything containing a copyrighted song within seconds, they can write software to scan uploaded content for offensive content too. Will it catch everything? Of course not, but it will get the load down to the point where humans can deal with it.

There are countless stories of how Facebook employs a small army of content moderators to look into uploaded content, how it pays them very little, and how scanning the lower bounds of human depravity is about as grinding a job as there is in the world. But if they can create filters for pornographic content, they can create filters for gore and violence, and, again, stop 90% of it before it ever gets posted.

Don’t tell me it’s impossible. That’s simply not true. It would just cost more. And, again, if it costs so much that it puts them out of business? Well, too bad. If the holy religion of Capitalism says they can’t sustain the business while they make the effort to keep the garbage off their platforms, then I guess the all-powerful force of The Market will have spoken. The world would be better off without those platforms.

I remember an internet that was made of more than 5 web sites, which all just repost content from each other. It was pretty great. People would still be free to host a site, and put whatever they wanted to on it. It couldn’t be any easier, these days, to rent a WordPress site and post whatever nonsense you want, like I’m doing right here. You could even still be anonymous if you want. But your site would be responsible for what gets posted. And, if it’s garbage, or it breaks the law, you’re going to get blocked or taken down. As so many people want to point out in discussions of being downvoted for unpopular opinions, the First Amendment doesn’t protect you from being a jerk.

Facebook, Twitter, Reddit, Imgur, and Google are all being gamed. As the last two Presidential elections have shown, world powers are influencing the content on these sites, and manipulating our national political discourse. This needs to stop. It seems to me that repealing Section 230 would cause those platforms to get serious about being transparent about where that content comes from, and be held accountable for it. Again, don’t tell me that they can’t. They just don’t want to spend the money to do so. In fact, they’re making money on the spread of such propaganda. Why should Americans put up with these mega-companies making billions by providing a platform to be used against us politically? Not just allowing it, but being financially incentivized into providing it? It doesn’t make any sense to me.

In summary, I don’t see how repealing Section 230 hurts any of the scenarios that folks like the EFF say that it does, and it would seem to hold all the right people accountable for the absolute disgrace that social media has become.