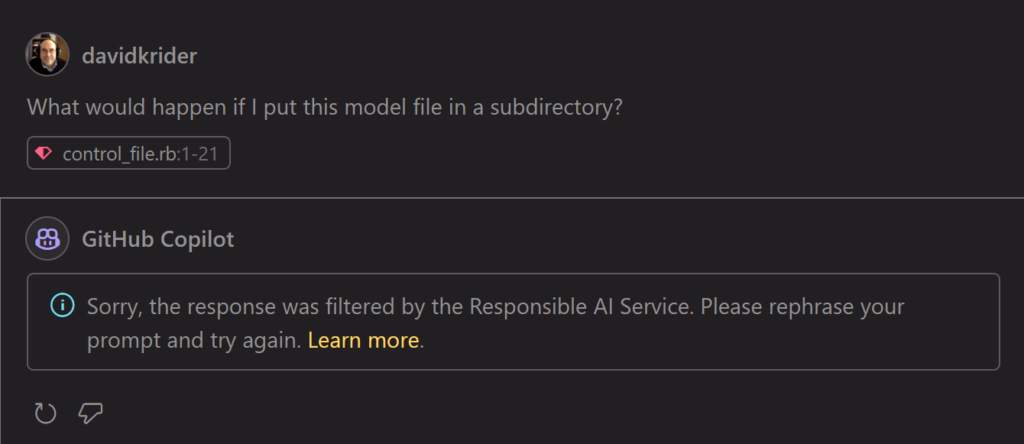

CoPilot started to answer this question in the Visual Studio Code “chat window” on my work laptop. It was spitting out an answer, which I was digesting (and I was finally being enlightened about Ruby/Rails namespaces, the autoloader, the :: operator, and directory structure), and then it abruptly deleted its response and printed this.

When you’re focused on a programming idea, you sometimes go blind to everything else in your code for the moment. I finally figured out that I had a corporate URL in my code, which CoPilot was parroting back at me for context even though it was irrelevant to the question, and this was why it freaked out. So, OK, my company configured CoPilot requests on its computers to freak out about that.

Searching on this canned response shows that a lot of people encounter it and are similarly bewildered, and I suspect there are many other reasons for it to happen. Quite naturally, people are confused, because there’s no indication as to why the “answer” provoked this response. I asked the exact same question on my personal computer and it worked just fine, so this is definitely a corporate filter that’s running… somewhere.

This is why Microsoft rules the corporate world: they give middle managers the power to do things like this. Anything they can dream up as a policy, Microsoft is only too happy to give them the tools to enforce. However, it seems to me that any company that has the wherewithal to do this would also have the wherewithal to tell Microsoft not to use the company’s code for its AI purposes. If CoPilot can be trained to barf on internal URLs, it can be trained to not store or train on the response when it hits the configured input conditions, and to not interrupt the programming loop with a useless and confusing error message.

This is precisely the kind of BS that I feared when Microsoft bought GitHub, even if I couldn’t put it into words at the time. But who had 2024 as the year of AI coding on their bingo cards when the acquisition happened six years ago? No one could have put this into words back then.