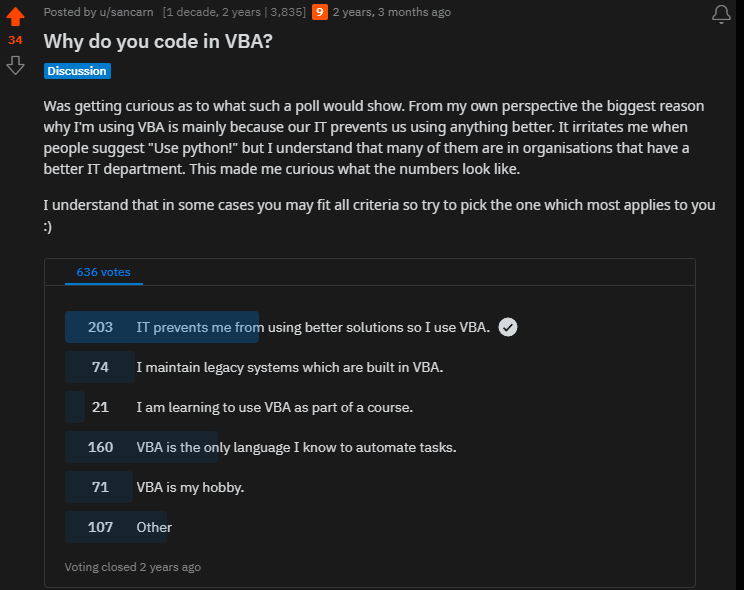

Another Twitter post led me to this Reddit post:

Further down in the comments, OP had this to say:

I copy-paste all of this for full context, but I want to emphasize this paragraph:

It tried a bunch of things before it had the breakthrough, but as you probably know it always said: “I found it! This is finally the root case of the problem!” but every AI does that on almost every prompt, so it wasn’t anything special. It was just another thing it did that I tested and noticed it worked without also regressing other stuff, and then I looked at it and compared it, and then realized what it did. Then I had to go and delete a bunch of other unnecessary changes that Opus also did that it insisted was good to leave in and it didn’t want to remove, but wasn’t actually pertinent to the issue.

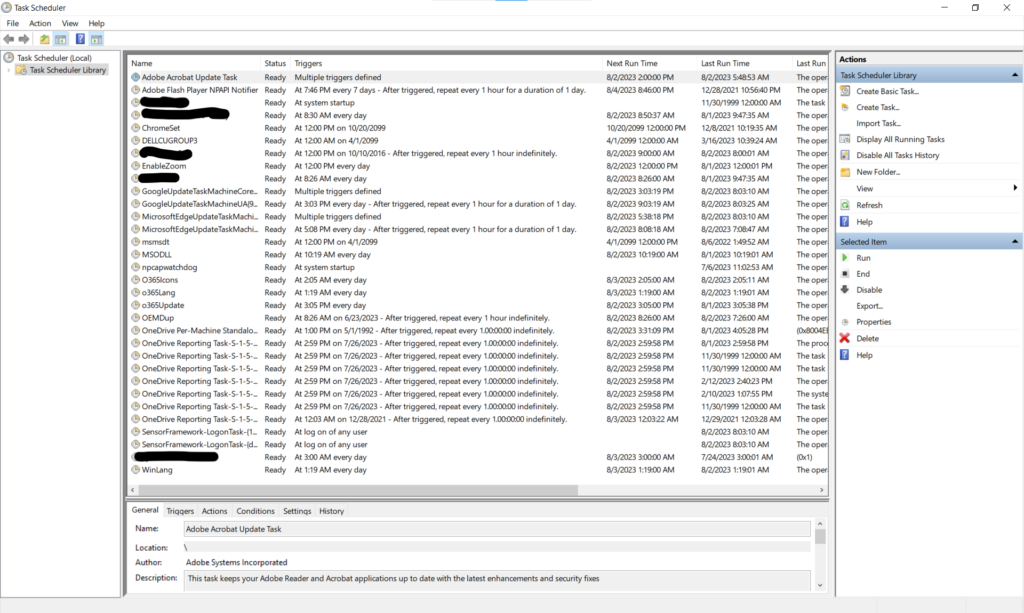

Now, I express this sentiment on various social media sites so often that I have it as a shortcut on Mac and iOS: when I type “reddits”, it autocompletes to say, “Reddit is a CCP-funded deep state psyop against a very specific demographic, and nothing on it should be taken at face value.” But in this case, it rings authentic. I deal with this most every day at this point.

A few years ago, a few Google searches would dig up StackOverflow answers and personal blog posts that specifically dealt with whatever you were asking about. But within the span of a few years, Google has become almost worthless for searching on programming problems. It’s lucky that LLMs have come along now, or this work would be that much harder. I’d suggest that Google let their search product slide into terribleness in order to push people to their AI product, but they don’t have one yet, so their awfulness can just be ascribed to basic late-stage capitalism and utterly predictable monopolistic malfeasance.

Anyway, this last quote is so appropriate. AI can’t figure out if what it did actually worked, but it always says it did, and when you see a move in the right direction, you have to figure out what part made it work, and then delete all the other stuff that the AI broke in the process. In this regard, it is exactly like a guy I used to work with who would break half a dozen things on my servers before he got something working. He never cleaned up his failed attempts. Every time he told me he had done something, I’d ask a few questions about his process, and then just quietly go put things back in order.

I just went through this process over the last weekend. I’m trying to move a codebase from Rails 6 to Rails 8. There are a lot of changes in the way Rails handles JavaScript between these two versions, from the bundling to the running. I’ve gotten left behind on a lot of this. Even when I spun up this Rails 6 app six years ago, I was using the old asset bundling technique from Rails 3/4. I was happy to make the jump to “no-build” in 8 all at once, but my application servers needed upgrading from a version of Ubuntu which is no longer getting security updates. That upgrade forced me into upgrading Node.js, which in turn broke the asset building process in Rails, because the dreaded SSL upgrade has moved to this part of the stack now. So I moved to Webpacker, which took way too long to work out. I tried to use AI throughout, but it was of almost no help at all.

After finally getting moved to Webpacker, just in time to move to ImportMap, I have had to tackle how Stimulus, Turbo, and Hotwire work. Rails 7 focused on Turbolinks, which utterly broke the JavaScript datatable widget, AgGrid, which I use all over the site, so I removed Turbolinks from my Rails 6 app, and never upgraded to 7. Now I’m learning how to do things in the new “Rails way,” and AI has been helpful… in precisely the same way that this Reddit poster describes. I’ve had to run many prompts and tweak and cajole its “thinking,” but I finally got a really neat and tiny solution to a question… All while verifying the approach with videos from GoRails. (Which I subscribed to just to make this turn of learning.)

After I had a working function for a particular feature I wanted, I had an “aha!” moment. I could see what all this new tooling and plumbing was about. I felt a little foolish, because it winds up just being a way to move the JavaScript you need out of the view code. That’s a Good Thing (TM), but I couldn’t see the forest for the trees until that moment.

And even after this success, I’m plagued with a more philosophical question. The way that Claude factored the feature I wanted was all JavaScript. Meaning, once the view was loaded, it dealt with the interactivity without going back through a server-side render step. It relied on the browser doing the DOM manipulation. Which is the “old” way of doing things, right? I asked it to specifically use Turbo streams to render the HTML that the browser could use to simply replace the div, and it said, “Oh, yes, right, that’s the more idiomatic way to do this in Rails,” and gave me a partial that the Stimulus controller could use to do the same thing. But now I have a clean, one-file, entirely-Stimulus approach, versus having extra calls to format_for in a controller, a turbo-stream ERB file, and a partial. Seems to me like too much extra machinery just to make things idiomatic.

Also, when I asked Claude for this refactor, it broke the feature. So now I have to figure out if I want to fix the turbo-stream approach to keep things “the Rails way,” or just let Stimulus handle the whole thing. I think I will be using turbo-streams to refresh data in my AgGrid tables, but I think I’ll let Stimulus do all the work when it can. It keeps things simpler, and it’s basically what I was doing before anyway.
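To make the trade-off concrete, here is a minimal sketch of the two approaches in TypeScript. Everything in it is invented for illustration: the "page" is just a map from element id to HTML, the feature is a hypothetical show/hide panel, and `applyTurboStream` is a crude stand-in for what Turbo does in the browser when a response arrives. The only real detail is the shape of the `<turbo-stream action="replace">` element wrapping a `<template>`.

```typescript
// Hypothetical stand-in for the browser page: element id -> outer HTML.
type Page = Map<string, string>;

// Hypothetical feature: a panel that is either open or closed.
// In the Turbo approach this would be the server-side partial.
function panelHtml(id: string, open: boolean): string {
  const body = open ? "<p>Details go here</p>" : "";
  return `<div id="${id}">${body}</div>`;
}

// Approach 1: everything happens client-side, the way a single
// Stimulus controller would handle it. No round trip to the server.
function toggleClientSide(page: Page, id: string, open: boolean): void {
  page.set(id, panelHtml(id, open));
}

// Approach 2, server half: wrap the rendered partial in a Turbo Stream
// "replace" element that targets the div by id.
function renderTurboStream(id: string, open: boolean): string {
  return `<turbo-stream action="replace" target="${id}"><template>${panelHtml(id, open)}</template></turbo-stream>`;
}

// Approach 2, client half: a simplified imitation of Turbo applying the
// stream. It only understands the exact shape produced above.
function applyTurboStream(page: Page, stream: string): void {
  const match = stream.match(
    /^<turbo-stream action="replace" target="([^"]+)"><template>([\s\S]*)<\/template><\/turbo-stream>$/
  );
  if (!match) throw new Error("unsupported stream");
  page.set(match[1], match[2]);
}

// Both routes end with the same markup on the page.
const clientOnly: Page = new Map([["panel", panelHtml("panel", false)]]);
const viaServer: Page = new Map(clientOnly);
toggleClientSide(clientOnly, "panel", true);
applyTurboStream(viaServer, renderTurboStream("panel", true));
console.log(clientOnly.get("panel") === viaServer.get("panel"));
```

The end state is identical either way. The difference is the number of moving parts: the first route is one function, while the second needs a render step on the server plus something on the client to apply the result.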

I want to go back to what I was saying before about how you have to “clean up” after the AI. This is critically important, and it’s a problem in the making that I’m hoping corporate managers figure out before it becomes permanent. If you hire juniors and expect them to produce code like seniors with AI, you’re going to wind up with a bunch of instability because of extraneous code that AI leaves behind. I expect that this problem is too “viral” to survive. I don’t think an actual, useful, non-trivial application could last very long being vibe-coded. It would start to teeter, and people would hit it with more AI, and it would fall over and shatter, and then they’d get to keep all the pieces. I worry that current applications will be patched here and there by juniors who don’t clean up the mess left behind, and these errors will accumulate until the codebase is so broken that…

Oh, for Pete’s sake. What am I even saying!? The same thing will happen here that has been happening for 40 years of corporate IT development: systems get so wonky and unmaintainable and misaligned that new middle managers come in, pitch senior management into doing a massive system replacement, spend twice as much time and three times as much money as they said it would take, launch the system with dismal performance and terrible UI, piss everyone off, polish their resume, get a new job, and leave the company and everyone in it holding the bag with the accretion of their terrible decisions made by committee over years.

AI will change absolutely nothing about this. The problem isn’t technology, or code, or languages, or databases, or APIs, or anything else. The problem is people. It’s always BEEN people. I’m not clear that it will ever NOT be about people.