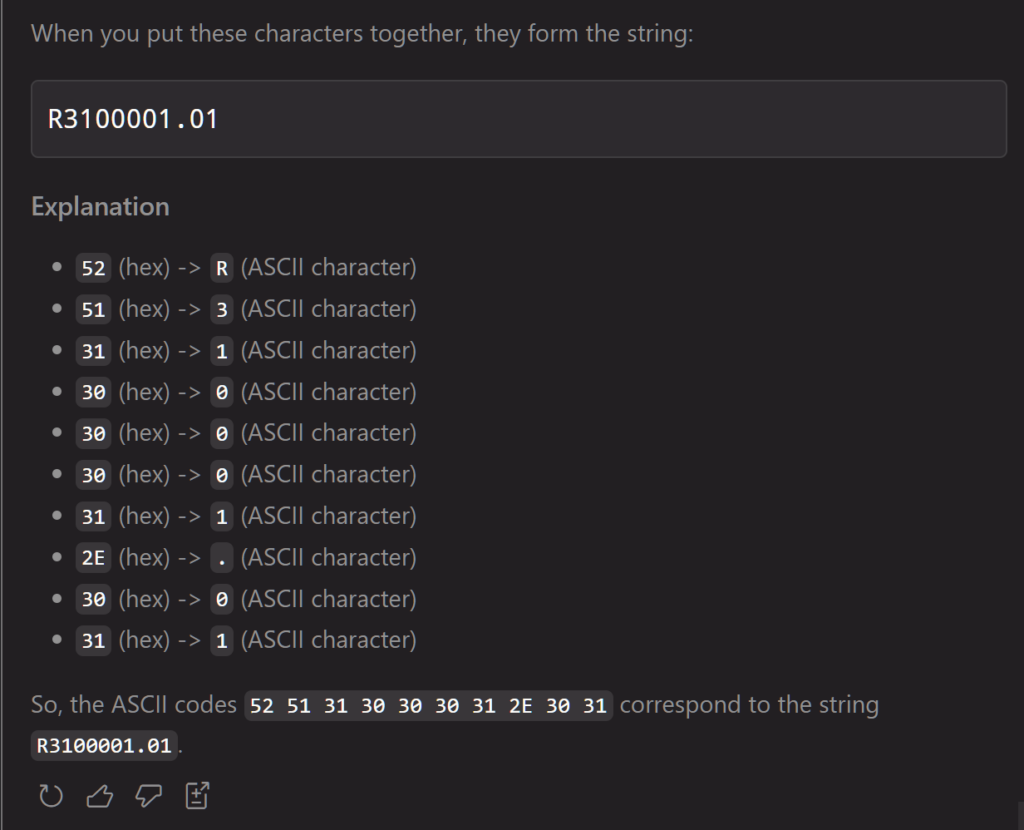

Eric Raymond, another bright star in the programming universe, weighed in on the actual capability of current-gen “AI.” He echoed DHH and Carmack, again reiterating my own opinion that LLMs cannot replace humans at (non-trivial) programming. Yet. Sure, they can write a single function or a web page, but even then you’ll have to fix the output so that errors don’t accumulate in the project.

Maybe better “meta-LLMs,” with more specialist subsystems, will be able to do better, but we really already have them. The gap is not one of degree, but of kind. We will need to come up with some other technology before AI supplants humans at programming. Maybe the next step is AGI; maybe there are a couple more intermediate developments before that becomes a reality.

At this point, it should be clear that people who are obsequiously bullish on how AI is going to replace all the programmers at your company are grifting. As the line in The Princess Bride says, “Anyone who says differently is selling something.”